Humans naturally learn throughout their lives without forgetting what they already know. They can also infer and comprehend new concepts from just a few examples, or even none. Deep neural networks, however, lack these abilities and typically require large-scale, static datasets.

Many real-world applications involve data that evolves continuously yet remains sparse. For instance, patient data is generated all the time, but it is scarce for rare diseases and difficult to share because of privacy concerns. Motivated by this, I aim to build practical models that reason and learn more like humans: models that learn incrementally, generalize from limited data, and remain stable under distribution shifts.

2026

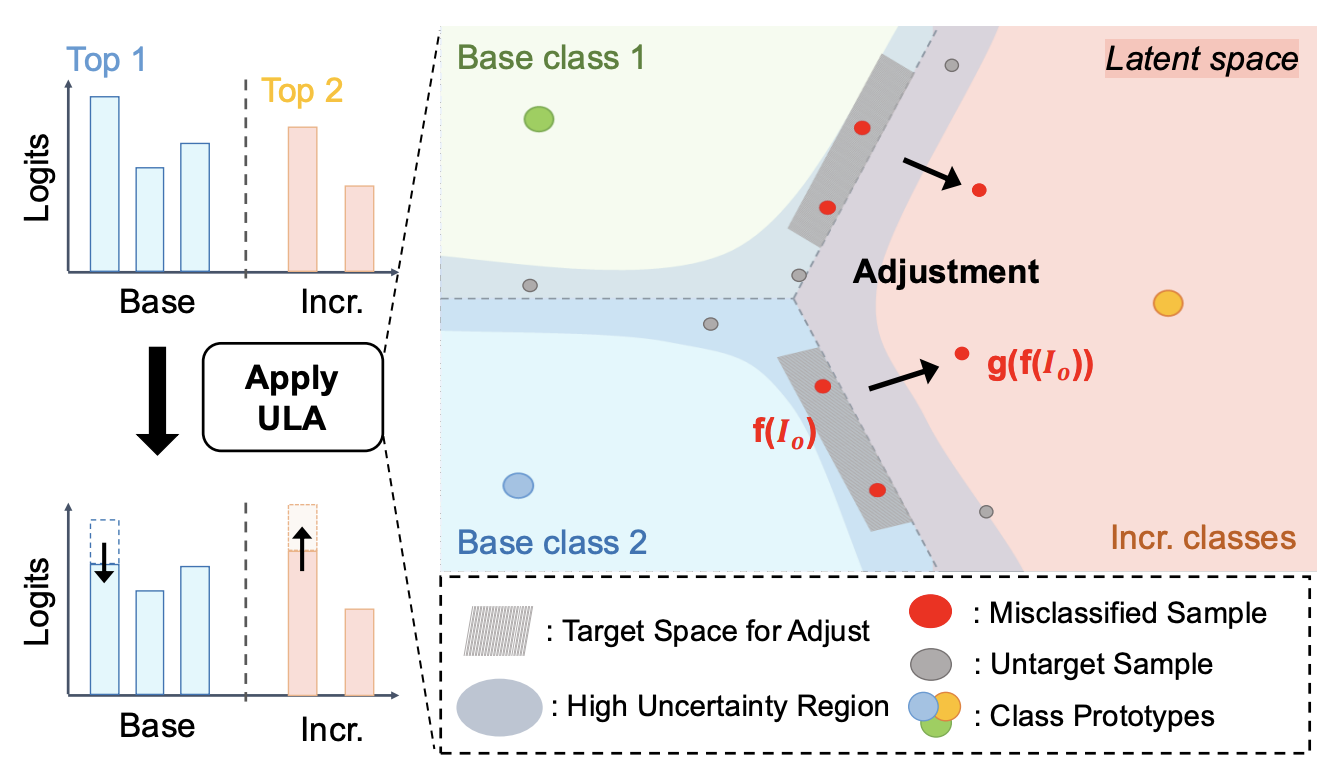

Training-Free Uncertainty-Guided Logit Adjustment for Few-Shot Class-Incremental Learning

Sungwon Woo, Dongjun Hwang, Shiwon Kim, Junsuk Choe, Jongho Nang†

Conference on Computer Vision and Pattern Recognition (CVPR) 2026 Findings

TBD

# continual learning # few-shot learning

2025

Does Prior Data Matter? Exploring Joint Training in the Context of Few-Shot Class-Incremental Learning

Shiwon Kim*, Dongjun Hwang*†, Sungwon Woo*, Rita Singh†

International Conference on Computer Vision (ICCV) 2025 Workshop on Continual Learning in Computer Vision (CLVision)

[TL;DR] [Paper] [Poster] [Code]

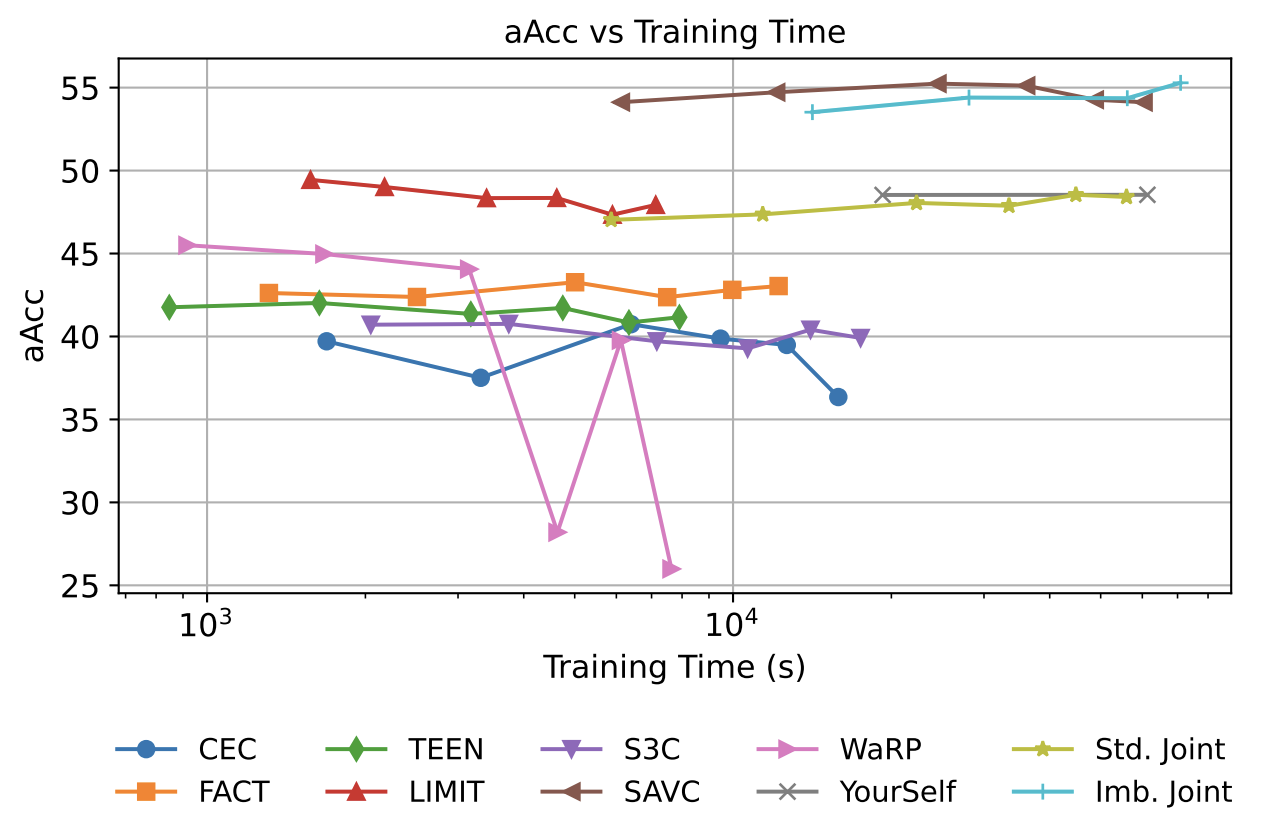

Challenged the assumption of limited access to prior data in few-shot class-incremental learning, and compared joint training with incremental learning to empirically assess the practical impact of full data access on model performance.

# continual learning # few-shot learning

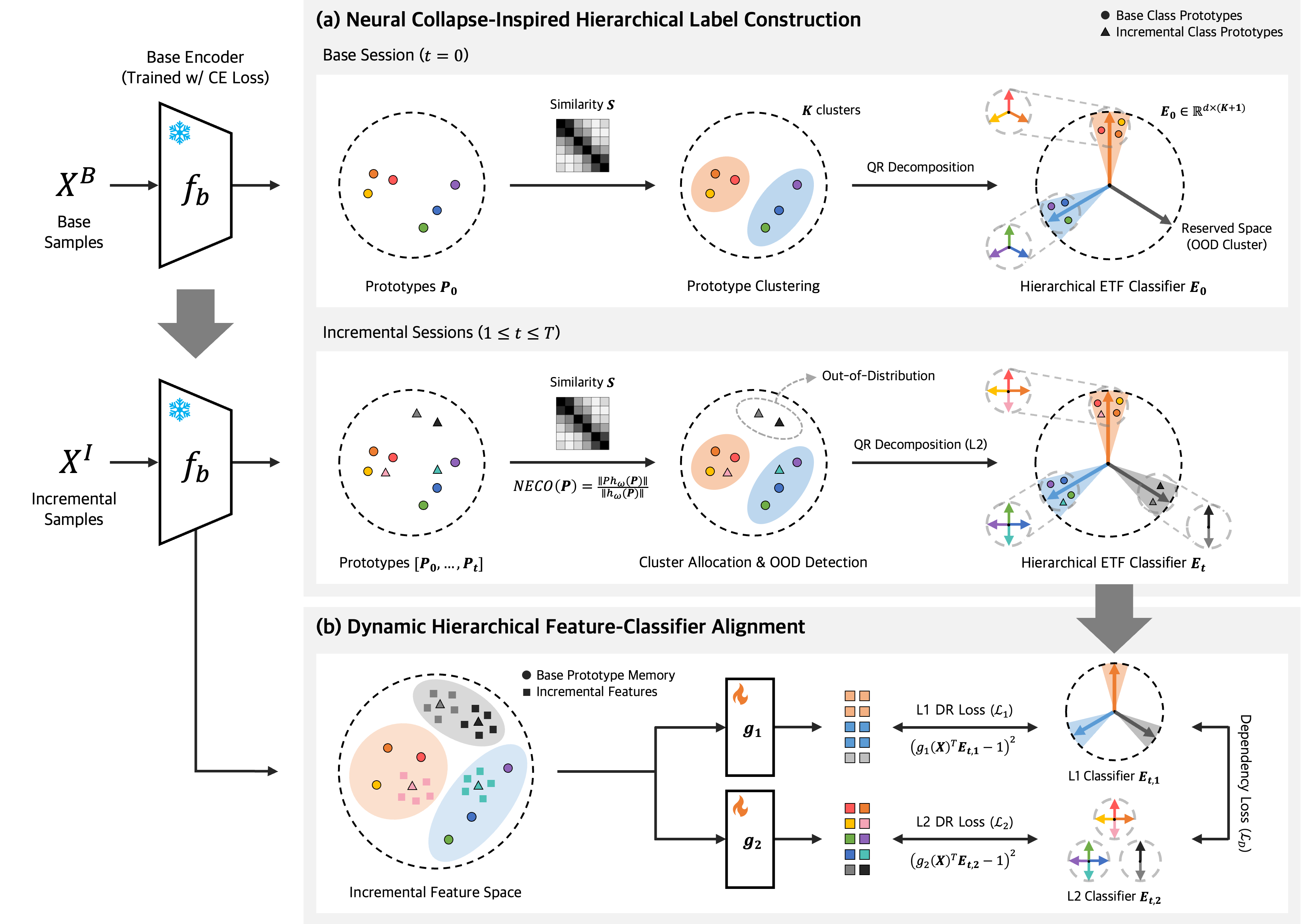

Debiasing Few-Shot Class-Incremental Learning via Dynamic Feature-Classifier Alignment

Shiwon Kim

Master's Thesis, Yonsei University

Proposed a dynamic hierarchical equiangular tight frame (DH-ETF) classifier that mitigates base-class bias by preserving prior knowledge through a fixed cluster-level ETF, while enabling flexible accommodation of new classes using adaptable class-level ETFs within each cluster.

# continual learning # few-shot learning # neural collapse
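
For readers unfamiliar with the term, the sketch below builds a standard simplex equiangular tight frame as used in the neural collapse literature: unit-norm class vectors whose pairwise cosine similarity is exactly -1/(K-1). It is only an illustration of the basic ETF building block, not the DH-ETF classifier from the thesis; the function name `simplex_etf`, its parameters, and the random-orthonormal-basis construction are assumptions made for this example.

```python
import numpy as np

def simplex_etf(num_classes: int, feat_dim: int, seed: int = 0) -> np.ndarray:
    """Return a (feat_dim, num_classes) matrix whose columns form a simplex ETF.

    Hypothetical helper for illustration only; not taken from the thesis.
    """
    if num_classes < 2:
        raise ValueError("need at least two classes")
    if feat_dim < num_classes:
        raise ValueError("this construction needs feat_dim >= num_classes")
    k = num_classes
    rng = np.random.default_rng(seed)
    # Random matrix with orthonormal columns (U^T U = I) via reduced QR.
    u, _ = np.linalg.qr(rng.standard_normal((feat_dim, k)))
    # Centering projector removes the mean direction shared by all classes.
    centering = np.eye(k) - np.ones((k, k)) / k
    # The scale factor makes every column unit-norm.
    return np.sqrt(k / (k - 1)) * (u @ centering)

if __name__ == "__main__":
    W = simplex_etf(num_classes=5, feat_dim=16)
    gram = W.T @ W
    # Diagonal entries are 1; off-diagonal entries are -1/(K-1) = -0.25.
    print(np.round(gram, 3))
```

A classifier whose weights are fixed to such a frame keeps class prototypes maximally and equally separated, which is the property that fixed-ETF classifiers rely on.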

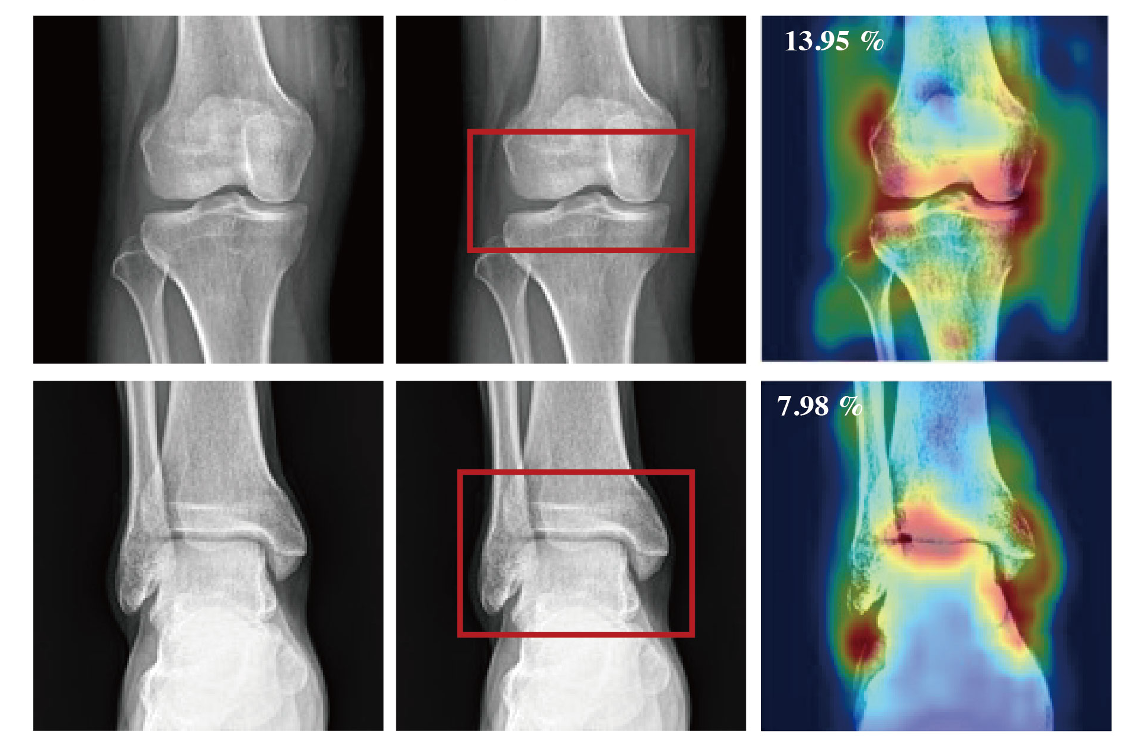

Classification Models for Arthropathy Grades of Multiple Joints Based on Hierarchical Continual Learning

Bong Kyung Jang*, Shiwon Kim*, Jae Yong Yu, JaeSeong Hong, Hee Woo Cho, Hong Seon Lee, Jiwoo Park, Jeesoo Woo, Young Han Lee†, Yu Rang Park†

La Radiologia Medica (IF 2024: 9.7)

[TL;DR] [Paper] [Slides] [Code]

Developed and validated a continual learning framework for arthropathy grade classification scalable across multiple joints, using hierarchically labeled radiographs of the knee, elbow, ankle, shoulder, and hip from three tertiary hospitals.

# medical imaging # continual learning

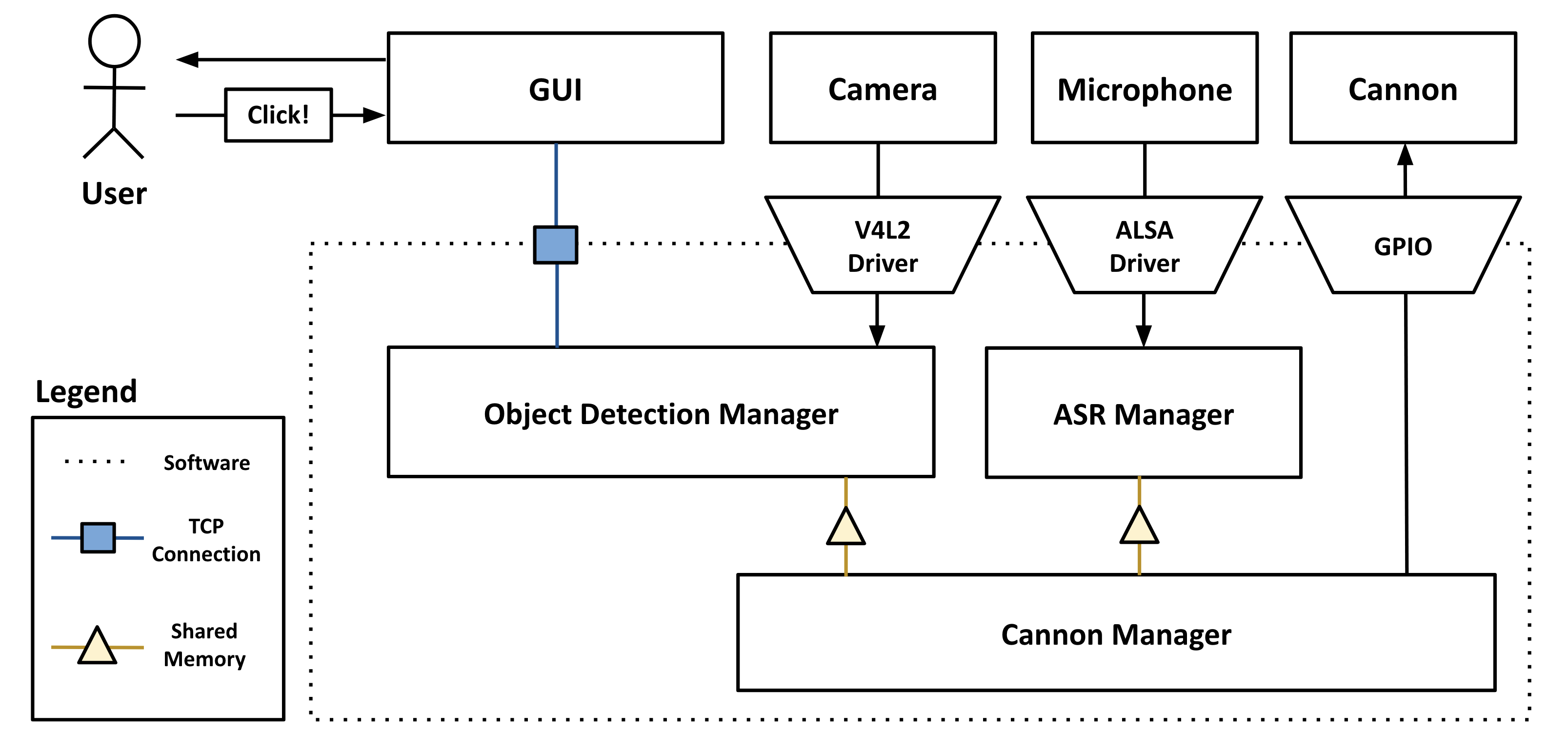

AI-Driven Automated Target Prioritization and Engagement

Kyungjin Kim, Shiwon Kim, Seungjae Baek, Soomin Chung, Chani Jung, Dongjun Hwang, Vijay Sai

Studio Project: AI on the Edge with Robotics - 2024 CMU Intensive AI Education Program

Implemented a real-time nearest-target tracking algorithm and shoot/don't-shoot decision logic based on a fine-tuned YOLOv11s. Deployed the system on a Jetson Orin Nano and demonstrated engagement of both stationary and moving targets.

# robotics # object detection

2024

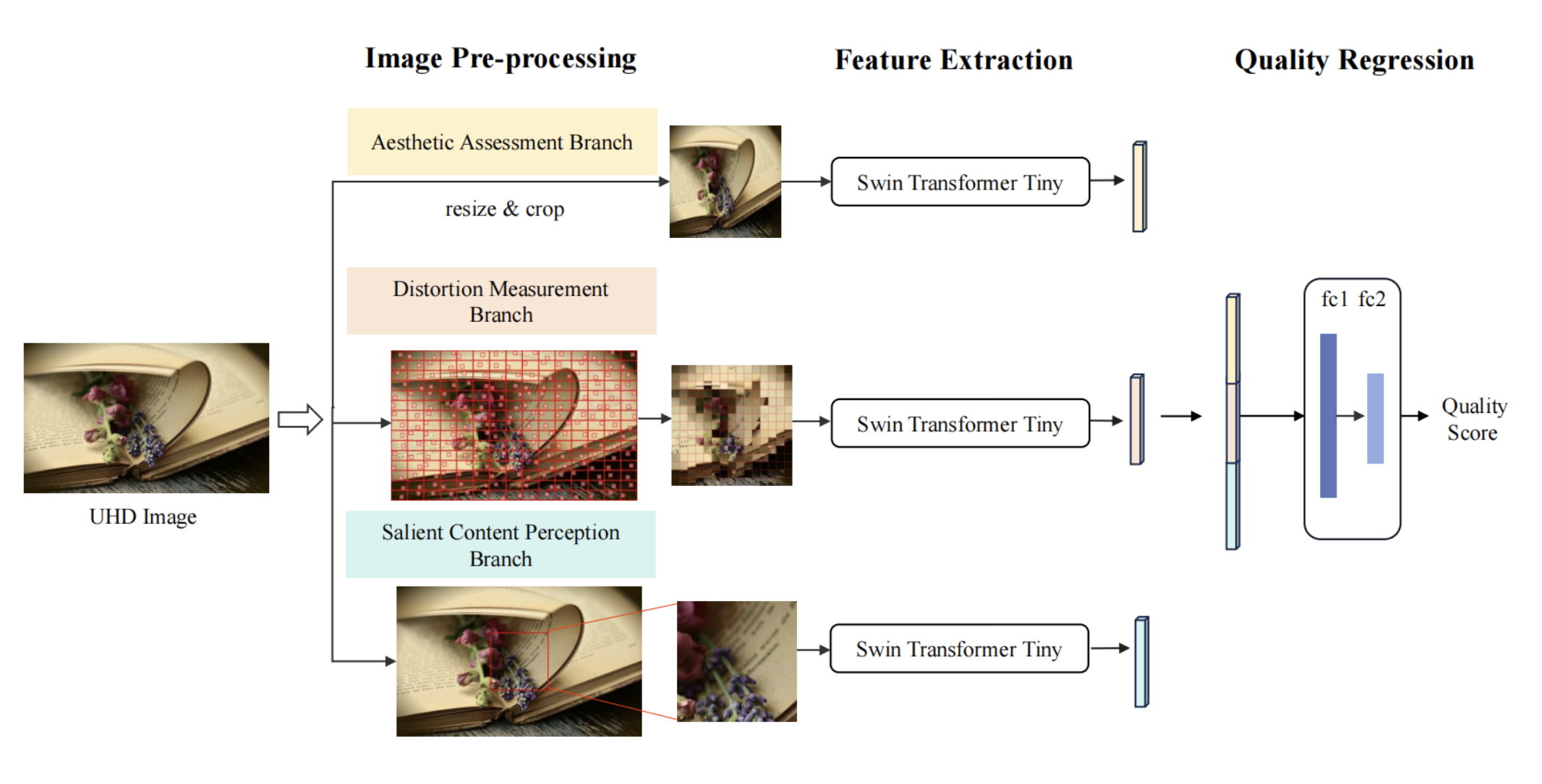

Image Quality and Abstract Perception Evaluation

Eryn Ma, Hail Song, Yuchen Dai, Shiwon Kim

Course Project - 11-785 Introduction to Deep Learning (Fall 2024, CMU)

Enhanced the interpretation of abstract image perceptions by combining CLIP-IQA and UIQA with a multi-branch backbone, demonstrating superior accuracy and faster convergence compared to existing image quality assessment (IQA) methods.

# vision-language # image quality assessment # abstract perception

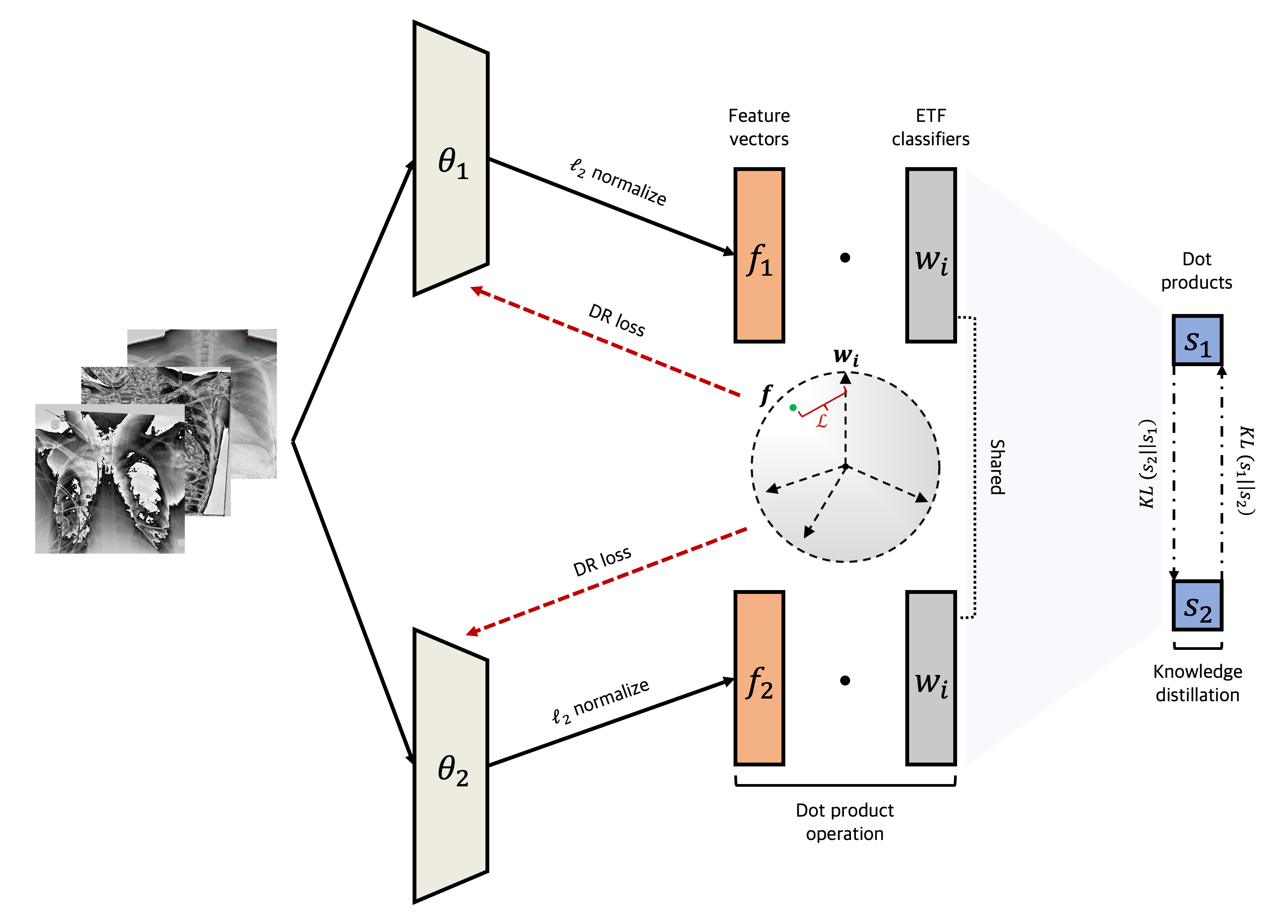

Robust Medical Image Classification Against Data Contamination and Poisoning

Min Kyoon Yoo, Jihae So, Shiwon Kim, Ho Seung Kang, Donghyeok Seo

2024 Yonsei Digital Healthcare Cybersecurity Competition

2nd Place

Designed a deep mutual learning framework that jointly trains two networks to learn a shared representation space anchored by a fixed equiangular tight frame (ETF) classifier, improving model robustness and generalization.

# medical imaging # data poisoning # neural collapse

2023

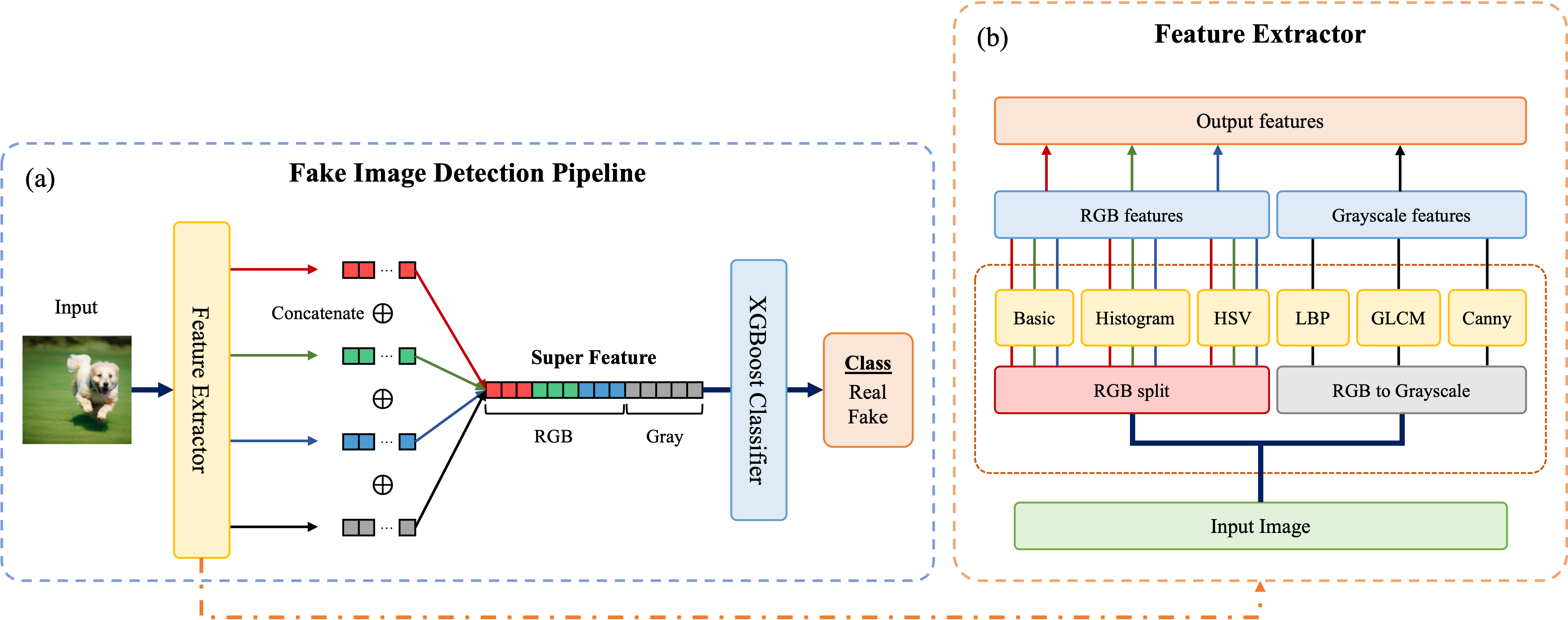

Statistical Feature-Based Memory-Efficient Machine Learning Pipeline for Fake Image Detection

Shiwon Kim, Min Kyoon Yoo, Hee Min Yang

2023 Yonsei Digital Healthcare Cybersecurity Competition

Digital Healthcare Human Resource Development Program

Developed a machine learning-based fake image detection pipeline that leverages pixel-level statistics, texture patterns, and edge information, achieving higher accuracy with lower memory usage than CNN-based deep learning approaches.

# medical imaging # synthetic data # machine learning
